Image courtesy of Wikimedia Commons

Caching for Improved Availability

What's upstream flows downhill

The saying "No man is an island entire of itself" also applies to the systems we build. From the simplest website to the most complicated enterprise architecture, every system has upstream dependencies, and those dependencies can fail. When an upstream dependency fails, everything downstream is affected, ultimately dumping the problem on the end user.

The question is: do the downstream systems have to fail too, or can we mitigate the issue somehow?

Let's get specific

To jump right to the code, take a look at the sample code in Python.

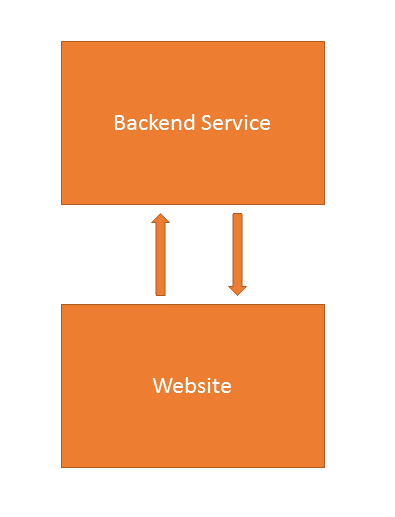

Let's take a look at a specific example. Let's say we have a website that talks to a backend service. The service could be ours, a third party's, or even a database. The website provides information to the user, and in our case there is no degraded experience we can present to the user if the service doesn't respond.

In this example, the website talks directly to the backend using the default "call and reply" style. Yes, there are other strategies to reduce the coupling between these systems, but that's not what we are talking about today. The system is already built, so rewriting everything in a new architecture for this issue is overkill.

What could go wrong?

The way it works today, when the backend service goes down, the website throws an error the next time it tries to call the service. Maybe the error is handled somehow and a slightly more user-friendly message is shown, but the user is still looking at an error until the backend service is restored. Now, you could point your users to the SLA the backend service so helpfully provides, but it probably won't make them any happier, since they came to use your website, not to play uptime police.

Let's take a look at how the default caching strategy could help improve things.

Time-based caching

By default, most caches provide time-based expiration. The internals differ per cache implementation, but this is the cache expiration policy of least resistance. Stick something in the cache, give it a seemingly reasonable expiration, say 1 hour, and move on to the next story in the backlog.
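As a rough sketch of that policy, the snippet below wraps a dictionary with a fixed time-to-live. The names `TimedCache`, `get_report`, and `fetch_from_backend` are made up for illustration and are not taken from the sample code. The injectable `clock` is an assumption added only to make the behaviour easy to test.

```python
import time


class TimedCache:
    """Tiny in-memory cache where every entry expires after a fixed TTL."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._entries = {}  # key -> (value, expires_at)

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Expired: the value is thrown away whether or not
            # the backend is able to give us a replacement.
            del self._entries[key]
            return None
        return value


def get_report(cache, fetch_from_backend):
    """Serve from the cache, falling back to the (hypothetical) backend call."""
    report = cache.get("report")
    if report is None:
        report = fetch_from_backend()  # raises if the backend is down
        cache.set("report", report)
    return report
```

Note that once the entry expires, `get_report` has nothing left to serve, so any error from `fetch_from_backend` goes straight to the caller.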

So what happens when the backend service goes down with your caching strategy in place?

Time bomb

Now the website has a time bomb. Since we don't know when the data was cached, we don't know when it will time out. If the backend service doesn't come back up before the cached data expires, all we have done is delay the explosion.

For all we know, there was 1 minute left before cache expiration when the backend service went down. The only thing we gain from this strategy is a false sense of security.
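The arithmetic behind the time bomb is simple enough to write down. This is only an illustration: `seconds_of_cover` is a hypothetical helper, and the 1 hour TTL is the example value used above.

```python
TTL_SECONDS = 60 * 60  # the "seemingly reasonable" 1 hour expiration


def seconds_of_cover(cached_at, outage_start, ttl=TTL_SECONDS):
    """How long after the outage starts the cached entry can still be served."""
    expires_at = cached_at + ttl
    # If the entry had already expired, the very next request fails.
    return max(0, expires_at - outage_start)


# Entry cached at t=0; outages starting at different moments (in seconds).
for outage_start in (0, 30 * 60, 59 * 60):
    cover = seconds_of_cover(cached_at=0, outage_start=outage_start)
    print(f"outage at {outage_start // 60:>2} min -> {cover // 60} min of cover")
```

The protection ranges from the full hour down to nothing, and which one you get depends entirely on when the outage happens to start relative to the last cache fill.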

Version-based caching

Odds are you are already familiar with version-based cache expiration. Every modern web browser has advanced caching based on both versioning and timeouts. Basically, instead of blindly expiring and throwing away what we currently have in the cache, we can check whether a new version exists. If it does, put that one in the cache; otherwise, keep using what we already have cached. This is a more complicated expiration policy, but a more realistic one for most systems.

By letting the website control cache expiration, we give it the ability to keep operating with stale information until the backend service comes back online.
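Here is a minimal sketch of that idea. It is not the Flask sample, and it assumes the backend offers some cheap way to ask for the current version of the data. `VersionedCache`, `BackendDown`, `fetch_version`, and `fetch_data` are all hypothetical names chosen for this illustration.

```python
class BackendDown(Exception):
    """Raised by the (hypothetical) backend client when the service is unreachable."""


class VersionedCache:
    """Keeps the last good copy and replaces it only when a newer version exists."""

    def __init__(self, fetch_version, fetch_data):
        self._fetch_version = fetch_version  # callable returning a version token
        self._fetch_data = fetch_data        # callable returning the full payload
        self._version = None
        self._data = None
        self._has_data = False

    def get(self):
        try:
            latest = self._fetch_version()
            if not self._has_data or latest != self._version:
                # Fetch first, assign after: a failure here leaves the old copy intact.
                data = self._fetch_data()
                self._data, self._version = data, latest
                self._has_data = True
        except BackendDown:
            if not self._has_data:
                raise  # nothing cached yet, so there is nothing stale to serve
            # Otherwise fall through and keep serving the stale copy.
        return self._data


# Fake backend used only to demonstrate the behaviour.
backend = {"up": True, "version": 1, "data": "price list v1"}

def fetch_version():
    if not backend["up"]:
        raise BackendDown()
    return backend["version"]

def fetch_data():
    if not backend["up"]:
        raise BackendDown()
    return backend["data"]

cache = VersionedCache(fetch_version, fetch_data)
print(cache.get())        # filled from the backend
backend["up"] = False
print(cache.get())        # backend is down, the cached copy is still served
backend.update(up=True, version=2, data="price list v2")
print(cache.get())        # backend is back with a new version, cache is refreshed
```

The trade-off is that users may see stale data for as long as the outage lasts, so this fits best where slightly old information is better than an error page.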

Wrapup

Try out the Python and Flask example and see how it works.

Version-based cache expiration makes sense in this context, so it might be worth giving it a shot. Systems fail all the time, so it's more a matter of being prepared to handle failure gracefully than of trying to eliminate every possibility of failure.

Good luck and happy caching!